(I’ve been meaning to write this post for a while to explain how I went about this effect. Since the effect primarily uses Houdini, I’m aiming it at beginners who may not know Houdini very well. Veteran Houdini users, consider this fair warning that I won’t use much shorthand!)

INTRODUCTION

A couple of months ago a friend of mine, Brandon Savanco (@branxord on Twitter), was asking on the Real Time VFX Discord how to create this “plate offset” effect inside Unity. The basic premise is that he had a series of cubes laid out in an arch and wanted to offset each cube using a vertex shader. The problem is that the normal of each vertex points in a different direction, so using the normals to offset positions makes every cube inflate instead of move.

Instead, you need to make the normals point in the same direction for each “group of vertices” you want to move.
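To put numbers on the difference, here is a minimal Python sketch (not Houdini or Unity code; every name in it is made up for illustration). It offsets the corners of a single cube twice: once along smooth per-vertex normals that point away from the cube’s centre, and once along a single shared direction, then compares the length of one edge.

```python
import math

# Eight corners of a unit cube centred on the origin (one "plate").
corners = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]

def normalize(v):
    """Scale a vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def offset(points, directions, amount):
    """Move each point along its own direction by `amount`."""
    return [tuple(p[i] + d[i] * amount for i in range(3))
            for p, d in zip(points, directions)]

def edge_length(points):
    """Distance between the first two corners, which share an edge."""
    return math.dist(points[0], points[1])

# Assumed smooth normals: every corner points away from the centre.
per_vertex = [normalize(c) for c in corners]
# One shared direction for the whole plate.
shared = [(0.0, 1.0, 0.0)] * len(corners)

inflated = offset(corners, per_vertex, 1.0)  # corners spread apart
rigid = offset(corners, shared, 1.0)         # the cube keeps its shape
```

With per-vertex normals the measured edge gets longer, which is the inflation. With the shared direction the edge length is unchanged and the cube simply translates.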

I’ve learned a good bit of Houdini and have had to convert normal data before, so I thought this effect would be a perfect use for it. There were some bumps along the way, but in the process I learned a great deal about Unity shaders and about how data is processed and transferred between applications.

STARTING OFF

An important note for the rest of this article: Houdini treats the terms “point” and “vertex” as two different things, while most 3D packages treat them both as the same thing. When we export our geometry from Houdini into Unity and such, we need to make sure we convert any “point” attributes to vertex attributes, or they might not work. Just some forewarning if you try anything here and it acts strange.

Let’s look at the finished effect using the example Brandon first wanted to make:

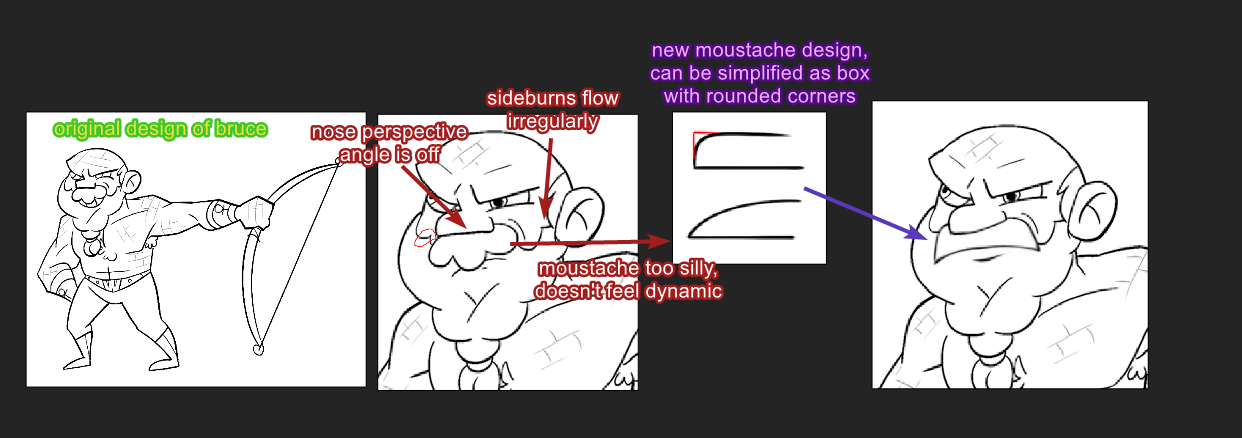

The boxes are moving how they should inside Houdini! But a couple of quirks show up immediately when trying to make this work. Vertex normals are usually used to calculate shading on the surfaces of geometry.

It’s very important for this purpose that normals are pointing out relative to the angle of the geometry, otherwise you get some wonkiness.

Here is the basic result we wanted in Houdini. I’ve set all of the normals to point upward along the Y axis using a vector of (0,1,0):

Here we are seeing the standard normal data represented by both vertex color and the normal blue lines.

My compromise was to keep the normals as is for shading, and in a second node tree inside Houdini, grab the normals, average them per plate we want to offset, and then transfer them to the unedited plates as a vertex color attribute, @Cd:

The most straightforward way I thought to do this was to:

- Generate the normals (as an @N point attribute).

- Blur @N to get an average of all the normals, so they basically point in the same direction.

- Convert @N to @Cd (a point attribute acting as vertex color). After this, it’s important to make extra sure that all of a plate’s vertices hold exactly the same value. An easy way to make that happen is to convert the attribute to a primitive attribute (a primitive in Houdini terms is basically a polygon face). This applies the same information across all vertices that are connected to the polygon in question. Then we just convert back to a point attribute.

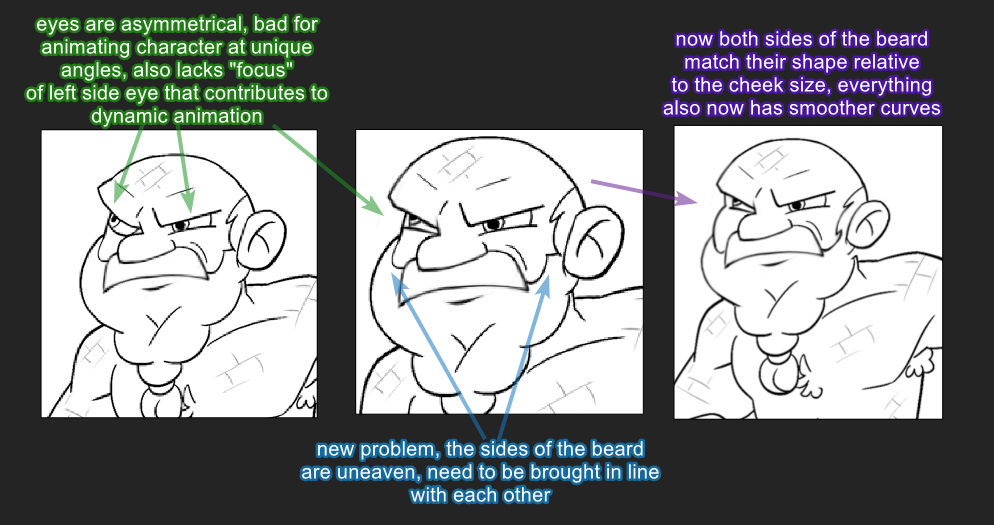

At the end of the hierarchy are two nodes that are doing something I haven’t touched on yet. As part of the compromise solution above, I mentioned that I made a second node tree and then transferred the averaged normals back to the original plates.

The way I’m doing this is by creating an @id point attribute using the Connectivity SOP node. This assigns the same number to all vertices that are connected together by edges, which essentially maps out each individual plate’s vertices as a group we can use later. There’s another, more advanced reason we want this @id point attribute that I’ll go into later. So if you are thinking this is a bit redundant right now, don’t worry, I know! It’s just nice to have flexibility later on.

So what we do is create an @id point attribute in each of the two node hierarchies, and then we can use them with the Attribute Transfer SOP. This will paste our @Cd/vertex color information onto the plates that still have their original @N normals, matched through their shared @id attribute! Very useful for a lot of techniques.
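The net result of this chain (average the normals per plate, then hand the same value to every vertex that shares an @id) can be sketched in plain Python. This is only a stand-in for the node network under the assumption that the goal is one normalized direction per plate; `average_normals_per_plate` and the sample data are hypothetical and do not reproduce what the Houdini nodes do internally.

```python
import math
from collections import defaultdict

def average_normals_per_plate(ids, normals):
    """Return one averaged, normalized direction per vertex, shared by plate id."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    for plate_id, n in zip(ids, normals):
        for i in range(3):
            sums[plate_id][i] += n[i]

    averaged = {}
    for plate_id, total in sums.items():
        length = math.sqrt(sum(c * c for c in total))
        # Guard against normals that cancel out to a zero-length vector.
        if length > 0.0:
            averaged[plate_id] = tuple(c / length for c in total)
        else:
            averaged[plate_id] = (0.0, 0.0, 0.0)

    # Every vertex looks up the value stored for its own plate.
    return [averaged[plate_id] for plate_id in ids]

# Two plates with two vertices each (made-up data).
ids = [0, 0, 1, 1]
normals = [(1.0, 1.0, 0.0), (-1.0, 1.0, 0.0),   # plate 0 leans left and right
           (1.0, 0.0, 1.0), (1.0, 0.0, -1.0)]   # plate 1 leans forward and back
cd = average_normals_per_plate(ids, normals)
```

In this sample both vertices of plate 0 end up with a direction pointing straight up, and both vertices of plate 1 with one pointing along X, so each plate would move as a single piece.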

So back to the final effect again: you can see the normals are pointing outward as usual, and you can see the beautiful green hue of the cubes. The reason they are shades of green is that the normals we are using to offset are mostly pointed up, and if you think of the direction up in an (x,y,z) sense, you get (0,1,0). Now, think of that as (r,g,b), and you’ll have 1 in the green channel, meaning up is green! This gives us a visual indicator that the effect is working.

ADDING MORE ADVANCED MOVEMENT

That was the end of the effect there, but I wanted to play around and try doing more things with this effect because converting data is very addicting. First I made this prettier effect using some rounded tiles with offset sine waves to create some randomness while I changed up the colors. This used extra attributes so we wouldn’t be able to put this in Unity, just some Houdini fun.

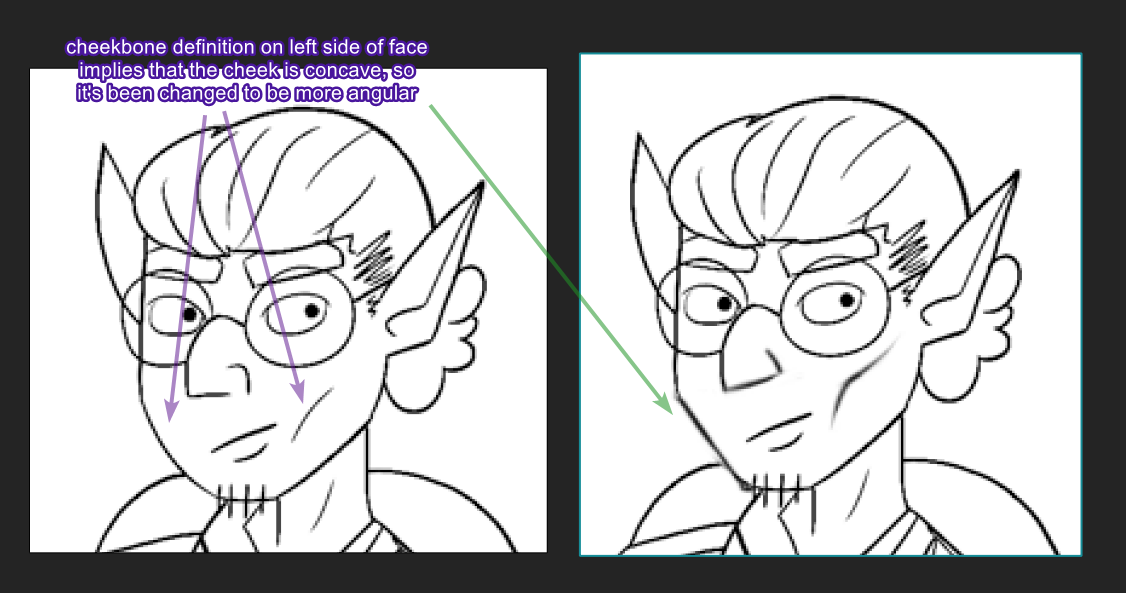

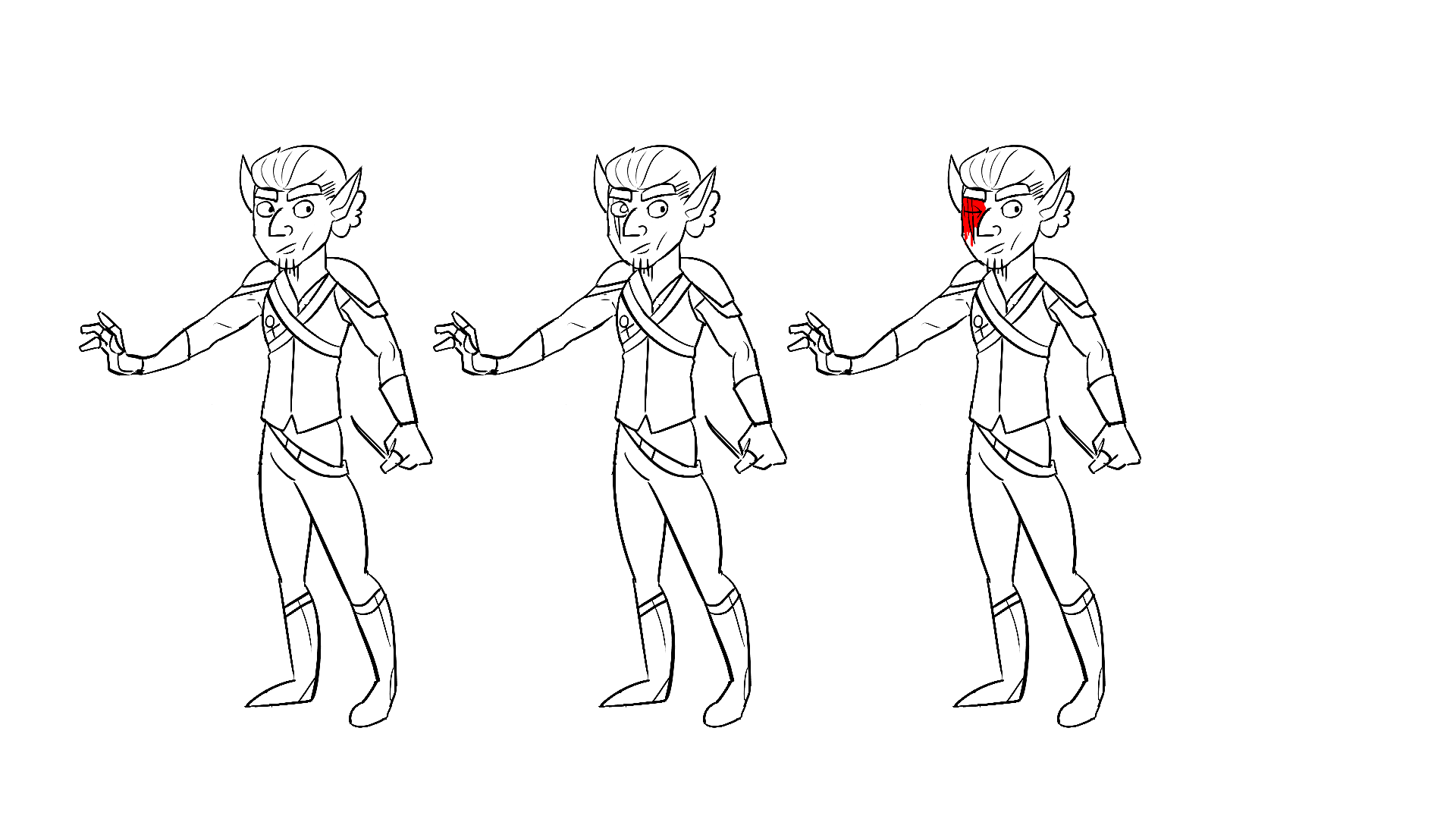

Now we get to the “Armor” part of this effect. In typical Houdini user fashion I used the default pig head as the mesh for this, and then I extruded out some plates to represent armor for our pig warrior. I won’t go into the modeling process too much here; I essentially just extruded polygons out and deleted the rest of the mesh to separate them. I also wanted the plates to have a nicer look, so I added a bevel to them and weighted the normals so we could get a nice glint on the edges.

I had some existing shader work from following a tutorial by Freya Holmer (@FreyaHolmer, she’s written Shader Forge and created Budget Cuts!). I think it’s a basic Blinn or Phong shader with some metal shading mixed in. I plugged my armored pig head into the same hierarchy we made before and popped it into Unity.

I somehow didn’t get any video of this, but essentially the armor was offsetting at strange angles. I racked my brain over it for a long time. It looked perfectly fine inside of Houdini, but it was wrong in Unity. I realized later that the pieces were only offsetting toward the positive side of each axis; anything that should move along X, for example, only ever went to the right.

A quick Google search revealed that while Houdini can store normal directions in vertex color, once you export that data into a file it gets clamped to 0 to 1. So now our color has no “opposite direction” to point in; it can only point forward along our axes! The trick here is that we have to “compress” our color from a -1 to 1 range into a 0 to 1 range. You can do this a few ways, but the way I default to is just to add 1 to @Cd, and then divide @Cd by 2. Then, once you are inside Unity, you take the vertex color data, subtract 0.5 from it, and multiply by 2. Voila! Now you have -1 to 1 inside Unity. And I screamed when I cracked this code:

Beautiful.
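The remapping is small enough to check by hand. Here is a minimal Python sketch of both halves, assuming the export clamps each color channel to the 0 to 1 range as described; the function names are hypothetical, and in practice the first half would live in a Houdini wrangle and the second in the Unity vertex shader.

```python
def clamp01(cd):
    """What the export effectively does to a raw direction stored as color."""
    return tuple(min(max(c, 0.0), 1.0) for c in cd)

def encode_direction(n):
    """Houdini side: remap each component from [-1, 1] to [0, 1]."""
    return tuple((c + 1.0) / 2.0 for c in n)

def decode_color(cd):
    """Shader side: remap each component from [0, 1] back to [-1, 1]."""
    return tuple((c - 0.5) * 2.0 for c in cd)

left = (-1.0, 0.0, 0.0)

# Without encoding, the negative X component is lost to the clamp.
broken = clamp01(left)

# With encoding, the direction survives the round trip.
restored = decode_color(clamp01(encode_direction(left)))
```

Here `broken` has lost its negative X component entirely, while `restored` equals the original `left` direction.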

UV’S AND MESSING WITH ARRAYS

But I wanted to go even further. I didn’t like that I was compensating by using a bunch of sine and cosine waves along different axes to create a faux random offset. I wanted to do an effect where all the plates pushed out in a wave vertically along the pig head. I quickly ran into a problem here: I couldn’t use the vertex positions, because they represent individual vertices, and so my plates wouldn’t move as plates. Back to square one, just like with the normals, except that I can’t store this data as vertex color anymore because I already did that for the normal offset. Since I only needed a vertical direction, I figured I didn’t need to store anything in a full vector. I could store it in the UVs! And just like the normals, we will be averaging the positions down to a single number per plate, so that every plate has the same data applied to it.
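As a rough illustration of the idea (not the VEX used in the actual setup), the Python sketch below averages the Y position of every vertex that shares a plate id and writes that single number into the V coordinate. The function name, the sample data, and the choice to leave U at 0 are all assumptions made for the example.

```python
from collections import defaultdict

def plate_height_uvs(ids, positions):
    """Give every vertex of a plate the same V: the plate's average height."""
    heights = defaultdict(list)
    for plate_id, p in zip(ids, positions):
        heights[plate_id].append(p[1])  # p[1] is the Y coordinate

    average = {plate_id: sum(ys) / len(ys) for plate_id, ys in heights.items()}

    # U is unused in this sketch, so it is simply left at 0.
    return [(0.0, average[plate_id]) for plate_id in ids]

# Two plates with two vertices each (made-up data).
ids = [0, 0, 1, 1]
positions = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0),
             (0.0, 2.0, 0.0), (1.0, 4.0, 0.0)]
uvs = plate_height_uvs(ids, positions)
```

Because every vertex of a plate reads the same V, anything the shader derives from it, such as the phase of a wave, is identical across the plate, so the plate still moves as one piece.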

This one took me a lot of time, and the solution I ended up using was a bit convoluted and not necessary for the effect. I thought I screwed up the effect early on but I actually just forgot to convert the @uv attribute in Houdini from a point attribute to a vertex attribute. The difference between “points” and “vertices” is very important in Houdini. Vertices are aware of what edge or polygons they are attached to, and have data relevant to that. It’s pretty important to have that information for things like UVs, because if you want to separate a vertex into two vertices for a texture seam, you need knowledge of what is connected where. Without it, data gets scrambled and fused in crazy ways. WITH THAT IN MIND, this solution is a little crazy, but it is still a useful trick to know.

I wrote some comments (the text in yellow) to clear up some things about the VEX here. If you are new to programming and are freaking out about the foreach loop or the array attribute, don’t worry. I had an epiphany during that process that helped me learn about arrays, and I decided to split it off into a separate article, since this one is getting really long and it’s a bit too much of a departure from the Main Point™ of this breakdown. If you are curious the link is here. If you don’t know how arrays work at all, I highly recommend reading it, as it comes from the point of view of someone who struggles with math and logic.

If you don’t want to read all of that, you can just use that code and not worry about it. The nodes after that “uv_average” wrangle with all the code are “truncate_uvs” and “promote_uv_to_vertex”.

“Promote_uv_to_vertex” is really important here. Without it the data won’t work properly inside Unity, and you’ll get madness like this:

We are using an Attribute Promote SOP node to convert our point attribute @uv to a vertex attribute with the same name.

The “truncate_uvs” node isn’t strictly necessary, but I threw it on there to try and solve a different problem that didn’t end up appearing. I basically used the trunc VEX function to snap our @uv point attribute to simpler numbers. So for example, instead of having 2.45617 as a position, it would become 2.45. You can do this with float numbers by multiplying them by 10 raised to the number of decimals you want to keep; for two decimals, that’s 100. Then you use trunc (short for truncate) to remove everything after the decimal point, so now we should have 245. Finally, we divide this number by the same number we multiplied by, which is 100, and now we have 2.45. Pretty neat! It can be helpful to reduce the amount of precision in decimals when you are inside games, particularly since we don’t need fine differences between our UV positions for this effect anyway.
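The same multiply, truncate, divide trick is easy to try outside Houdini. A minimal Python sketch follows, with `math.trunc` standing in for the VEX trunc function; the helper name and its `decimals` parameter are made up for illustration.

```python
import math

def truncate(value, decimals=2):
    """Drop everything past `decimals` decimal places without rounding."""
    scale = 10 ** decimals                     # 2 decimals -> 100
    return math.trunc(value * scale) / scale   # 2.45617 -> 245 -> 2.45

snapped = truncate(2.45617)
```

Note that this truncates toward zero rather than rounding, so a negative value like -2.45617 snaps to -2.45. Because of floating-point representation, a value that already sits exactly on a two-decimal step can occasionally land one step lower than expected.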

And with that, we have UVs based on each plate’s position along Y. Inside our shader we can view the V coordinate of our UVs, and we should see something like this:

And then we can plug it into our normal offset, and we will get the final effect!